Seeing Sound: Investigating the Effects of Visualizations and Complexity on Crowdsourced Audio Annotations

Cartwright, M., Seals, A., Salamon, J., Williams, A., Mikloska, S., MacConnell, D., Law, E., Bello, J.P., Nov, O. Seeing Sound: Investigating the Effects of Visualizations and Complexity on Crowdsourced Audio Annotations. In Proceedings of the ACM on Human-Computer Interaction, vol. 1(2): Computer-Supported Cooperative Work and Social Computing, 2017.

Abstract

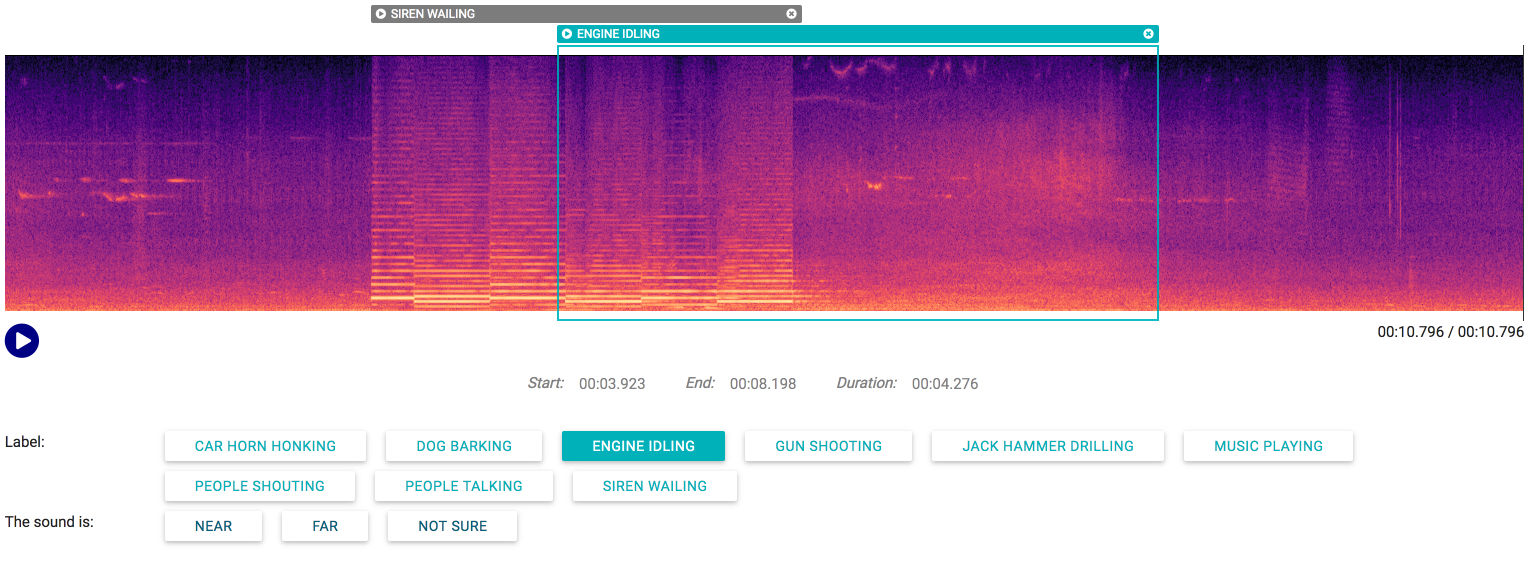

Audio annotation is key to developing machine-listening systems; yet, effective ways to accurately and rapidly obtain crowdsourced audio annotations are understudied. In this work, we seek to quantify the reliability/redundancy trade-off in crowdsourced soundscape annotation, investigate how visualizations affect accuracy and efficiency, and characterize how performance varies as a function of audio characteristics. Using a controlled experiment, we varied sound visualizations and the complexity of soundscapes presented to human annotators. Results show that more complex audio scenes result in lower annotator agreement, and that spectrogram visualizations are superior in producing higher-quality annotations at a lower cost of time and human labor. We also found that recall is more affected than precision by soundscape complexity, and that mistakes can often be attributed to certain sound event characteristics. These findings have implications not only for how we should design annotation tasks and interfaces for audio data, but also for how we train and evaluate machine-listening systems.
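To make the precision/recall distinction above concrete, here is a minimal sketch of one common way to score a single annotator's time-stamped events against a reference annotation. It assumes a frame-based comparison with a fixed hop size; the function names (`to_frames`, `precision_recall`), the `(onset, offset)` event format, and the hop size are illustrative assumptions, not necessarily the evaluation procedure used in the paper.

```python
from typing import List, Tuple

# Assumed event format: (onset_seconds, offset_seconds) for one sound class.
Event = Tuple[float, float]


def to_frames(events: List[Event], duration: float, hop: float) -> List[bool]:
    """Mark each fixed-length frame as active if any event overlaps it."""
    n_frames = int(round(duration / hop))
    frames = [False] * n_frames
    for onset, offset in events:
        for i in range(n_frames):
            start, end = i * hop, (i + 1) * hop
            if onset < end and offset > start:
                frames[i] = True
    return frames


def precision_recall(
    reference: List[Event],
    estimate: List[Event],
    duration: float,
    hop: float = 1.0,
) -> Tuple[float, float]:
    """Frame-level precision and recall of an annotation against a reference."""
    ref = to_frames(reference, duration, hop)
    est = to_frames(estimate, duration, hop)
    tp = sum(1 for r, e in zip(ref, est) if r and e)      # correctly marked frames
    fp = sum(1 for r, e in zip(ref, est) if e and not r)  # marked but not in reference
    fn = sum(1 for r, e in zip(ref, est) if r and not e)  # in reference but missed
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Hypothetical example: the annotator labels the first event but misses the second.
reference = [(0.0, 4.0), (6.0, 8.0)]
estimate = [(0.0, 4.0)]
p, r = precision_recall(reference, estimate, duration=10.0, hop=1.0)
```

In this hypothetical case every frame the annotator marked is correct, so precision stays at 1.0, while the missed event lowers recall to 4/6. A missed event of this kind reduces recall without affecting precision.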

Resources

- Code: The Audio Annotator

- Data: Seeing Sound Dataset