Projects

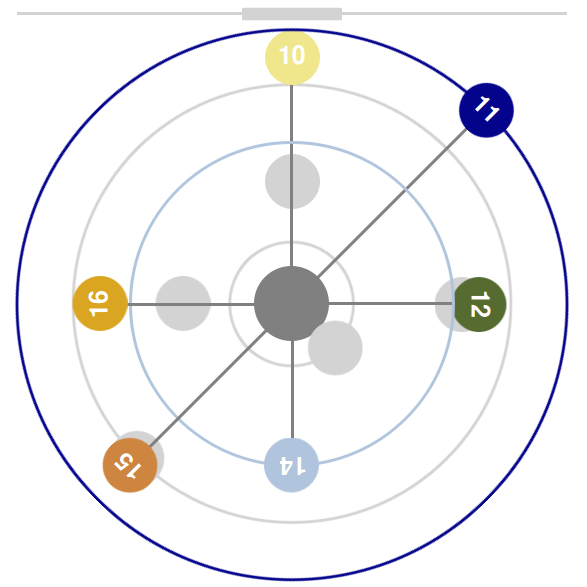

Machine Listening for Environmental Sound Understanding

Machine listening is the auditory sibling of computer vision and aims to extract meaningful information from audio signals. It has the potential to provide valuable information for numerous tasks, including understanding and improving the health of our cities (e.g., monitoring and mitigating noise pollution) and of natural environments (e.g., monitoring and conserving biodiversity). In our research, we investigate problems along the entire machine listening pipeline, from both human and technical perspectives, with the aim of building better tools for understanding sound at scale.

Selected publications

- Active Few-Shot Learning for Sound Event Detection (INTERSPEECH)

- Urban Rhapsody: Large-scale Exploration of Urban Soundscapes (EuroVis)

- Weakly Supervised Source-Specific Sound Level Estimation in Noisy Soundscapes (WASPAA)

- SONYC-UST-V2: An Urban Sound Tagging Dataset with Spatiotemporal Context (DCASE)

- Voice Anonymization in Urban Sound Recordings (MLSP)

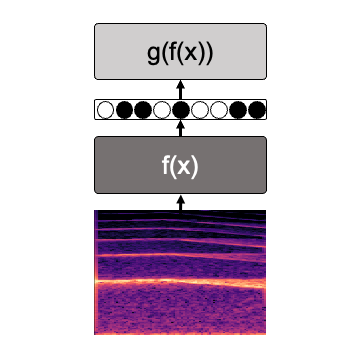

Audio Representation Learning

We aim to learn compact, semantically rich representations from large amounts of unlabeled data, which can then be transferred to related downstream tasks that have limited labeled data. We investigate both methods for learning these representations and how to use them effectively.

Selected publications

- A Study on Robustness to Perturbations for Representations of Environmental Sound (EUSIPCO)

- Specialized Embedding Approximation for Edge Intelligence: A Case Study in Urban Sound Classification (ICASSP)

- TriCycle: Audio Representation Learning from Sensor Network Data Using Self-Supervision (WASPAA)

- EdgeL3: Compressing L3-Net for Mote Scale Urban Noise Monitoring (PAISE)

Crowdsourced Audio Annotation and Quality Evaluation

To address the needs of modern, data-hungry machine learning algorithms, audio researchers often turn to crowdsourcing to speed up and scale their audio annotation and audio quality evaluation efforts. We research best practices for crowdsourced audio annotation and quality evaluation, with the aim of increasing annotation quality, throughput, and user engagement.

Selected publications

- Eliciting Confidence for Improving Crowdsourced Audio Annotations (PACM)

- Crowdsourcing Multi-label Audio Annotation Tasks with Citizen Scientists (CHI)

- Crowdsourced Pairwise-Comparison for Source Separation Evaluation (ICASSP)

- Seeing Sound: Investigating the Effects of Visualizations and Complexity on Crowdsourced Audio Annotations (PACM)

- Fast and Easy Crowdsourced Perceptual Audio Evaluation (ICASSP)

Human-Centered Audio Production Tools

Audio production tools help audio producers and engineers create the audio content we listen to in music, film, games, podcasts, and more. However, commercial audio production tools are often designed not for everyone but for professionals and serious hobbyists with particular knowledge, experience, and abilities. We research audio production tools that address user needs commercial developers do not meet. For example, to address the needs of novices, we reframe the controls to work within the interaction paradigms that research has identified in how audio engineers and musicians communicate auditory concepts to each other: evaluative feedback, natural language, vocal imitation, and exploration. More recently, we have begun investigating how to address the audio production needs of deaf and hard-of-hearing users.

Selected publications

- How people who are deaf, Deaf, and hard of hearing use technology in creative sound activities (ASSETS)

- VocalSketch: Vocally Imitating Audio Concepts (CHI)

- SynthAssist: Querying an Audio Synthesizer by Vocal Imitation (NIME)

- Mixploration: Rethinking the Audio Mixer Interface (IUI)

- Social-EQ: Crowdsourcing an Equalization Descriptor Map (ISMIR)

Music Information Retrieval

Music information retrieval (MIR) aims to extract information from music and has applications in automatic music transcription, music recommendation, computational musicology, music generation, and more. We investigate new methods, data, and tools to support MIR tasks.

Selected publications

- MONYC: Music of New York City Dataset (DCASE)

- Few-Shot Drum Transcription in Polyphonic Music (ISMIR)

- Open-Source Practices for Music Signal Processing Research: Recommendations for Transparent, Sustainable, and Reproducible Audio Research (IEEE SPM)

- Increasing Drum Transcription Vocabulary Using Data Synthesis (DAFx)

- Crowdsourcing a Real-World On-Line Query By Humming System (SMC)